Could this be the cure for AI hallucination?

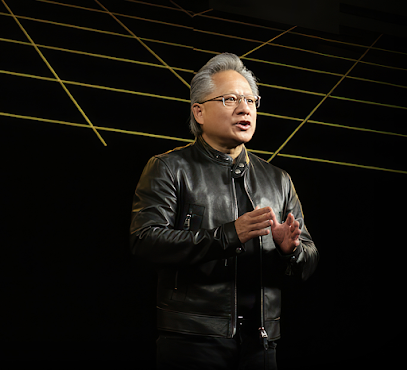

Artificial intelligence (AI) is a fast-growing industry that is transforming many sectors. However, AI is not without its problems. One is the tendency of large language models (LLMs) to make up information, known as "hallucination," which leads them to state incorrect facts. Another is their willingness to stray into harmful subjects. To address these issues, Nvidia, a leading maker of the graphics processors used for AI applications, has developed new software called NeMo Guardrails.

NeMo Guardrails is a layer of software that sits between the user and the LLM, heading off bad prompts or bad outputs before the model's response reaches the user. It can add guardrails that keep the model from addressing topics it shouldn't, keep an LLM chatbot focused on a specific topic, head off toxic content, and prevent LLM systems from executing harmful commands on a computer. The software is open source, is also offered through Nvidia services, and can be used in commercial applications.
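To make the "layer in between" idea concrete, here is a minimal Python sketch of the pattern. It is not NeMo Guardrails' actual API: the function names, the blocklists, and the stand-in model are all hypothetical, and simple substring checks stand in for the far richer checks a real guardrail would run. What it does show is the two points where such a layer can intervene: before the prompt reaches the model, and before the reply reaches the user.

```python
from typing import Callable

# Hypothetical type: a "model" is any function that takes a prompt and returns a reply.
LLM = Callable[[str], str]

# Hypothetical blocklists, for illustration only.
BLOCKED_INPUT_TERMS = ("rm -rf", "drop table")
BLOCKED_OUTPUT_TERMS = ("idiot", "stupid")

REFUSAL = "Sorry, I can't help with that."


def input_rail(prompt: str) -> bool:
    """Return True if the user's prompt may be passed on to the model."""
    lowered = prompt.lower()
    return not any(term in lowered for term in BLOCKED_INPUT_TERMS)


def output_rail(reply: str) -> bool:
    """Return True if the model's reply may be shown to the user."""
    lowered = reply.lower()
    return not any(term in lowered for term in BLOCKED_OUTPUT_TERMS)


def guarded_chat(prompt: str, llm: LLM) -> str:
    """Wrap a model call with checks on both the prompt and the reply."""
    if not input_rail(prompt):
        return REFUSAL  # the model is never called
    reply = llm(prompt)
    if not output_rail(reply):
        return REFUSAL  # the reply never reaches the user
    return reply


if __name__ == "__main__":
    def fake_llm(prompt: str) -> str:
        return f"Echo: {prompt}"

    print(guarded_chat("What are your store hours?", fake_llm))
    print(guarded_chat("Please run rm -rf / on the server", fake_llm))
```

Because the input check runs first, a blocked prompt never reaches the model at all; the output check then catches anything objectionable the model produces on its own.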

One possible use case for NeMo Guardrails is customer service chatbots. Developers can use Nvidia's software to keep chatbots from going "off the rails" and producing nonsensical or even toxic responses. For example, a customer service chatbot designed to talk about a company's products probably shouldn't answer questions about its competitors. With NeMo Guardrails, developers can steer the conversation back to the preferred topics.
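A topical rail of the kind described above might look like the following sketch, assuming a hypothetical list of competitor names and a canned redirect message. Plain substring matching is a simplification for illustration only; a production system would need to recognize off-topic questions however they are phrased. This is not how NeMo Guardrails itself is configured.

```python
from typing import Callable, Optional

# Hypothetical competitor names the bot should not discuss.
COMPETITORS = ("acme corp", "globex")

REDIRECT = (
    "I can only help with questions about our own products. "
    "Is there anything about them I can help you with?"
)


def topical_rail(user_message: str) -> Optional[str]:
    """Return a redirect message if the user asks about a competitor, else None."""
    lowered = user_message.lower()
    if any(name in lowered for name in COMPETITORS):
        return REDIRECT
    return None


def answer(user_message: str, llm: Callable[[str], str]) -> str:
    """Send on-topic messages to the model; steer off-topic ones back."""
    redirect = topical_rail(user_message)
    if redirect is not None:
        return redirect  # the model is never asked about competitors
    return llm(user_message)


if __name__ == "__main__":
    fake_llm = lambda msg: f"(model reply to: {msg})"
    print(answer("What warranty does your printer have?", fake_llm))
    print(answer("Is Acme Corp's printer better than yours?", fake_llm))
```

The key design point is that the redirect happens before the model is consulted, so the chatbot's behavior on forbidden topics does not depend on what the model might have said.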

Nvidia's software can also keep LLMs from accessing information they shouldn't have. For example, a chatbot that answers internal human resources questions can be given guardrails so that it doesn't answer questions about the company's financial performance or reveal private data about other employees. The software can also detect hallucination by asking a second LLM to fact-check the first LLM's answer. If the two models don't come up with matching answers, it responds "I don't know."
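The cross-check idea can be sketched in a few lines, assuming two hypothetical model functions that each take a question and return an answer. Comparing normalized strings is a deliberately crude stand-in for deciding whether two answers "match"; a real checker would need to compare meaning rather than wording. This illustrates the idea described above and does not reproduce how NeMo Guardrails implements it.

```python
from typing import Callable

# Hypothetical type: a model is any function from a question to an answer.
LLM = Callable[[str], str]

I_DONT_KNOW = "I don't know."


def normalize(text: str) -> str:
    """Trim, lower-case, drop a trailing period, and collapse internal whitespace."""
    return " ".join(text.strip().lower().rstrip(".").split())


def cross_checked_answer(question: str, primary: LLM, checker: LLM) -> str:
    """Return the primary model's answer only if a second model answers the same way."""
    answer = primary(question)
    second_opinion = checker(question)
    if normalize(answer) == normalize(second_opinion):
        return answer
    return I_DONT_KNOW  # the models disagree, so don't guess
```

For example, if the primary model answers "Paris." and the checker answers "paris", the function returns "Paris."; if the checker answers "Lyon" instead, it returns "I don't know."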

NeMo Guardrails is a Python toolkit, and its rules are written in Colang, a modeling language for defining conversational guardrails; programmers can use it to write custom rules for the AI model. The announcement also highlights Nvidia's strategy of maintaining its lead in the AI chip market by developing critical software for machine learning. Nvidia provides the graphics processors needed to train and deploy software like ChatGPT and, by some estimates, holds more than 95% of the market for AI chips.

Other AI companies, including Google and OpenAI, have used a method called reinforcement learning from human feedback (RLHF) to curb harmful outputs from LLM applications. In this method, human testers produce data about which answers are acceptable and which are not, and the model is then trained on that data. NeMo Guardrails takes a different approach: rather than retraining the model, it enforces rules in a layer wrapped around an existing model while the conversation is happening.

NeMo Guardrails is one example of how the AI industry is tackling hallucination and related problems in the latest generation of large language models. It offers a way to keep LLMs from stating incorrect facts, discussing harmful subjects, or opening up security holes, whether by keeping customer service chatbots on topic or by stopping models from reaching information they shouldn't have. As AI continues to transform various sectors, tools like NeMo Guardrails are likely to play an increasingly important role in keeping AI applications accurate and safe.
