Meta Platforms Crafts Its Own Chip

Meta Platforms, formerly known as Facebook, has unveiled its homegrown AI inference and video encoding chips at the AI Infra @ Scale event. Because the company is a pure hyperscaler rather than a cloud infrastructure provider, it is free to design hardware around its own software stacks. By developing its own chips, Meta Platforms aims to optimize performance, reduce costs, and keep up with the growing demands of AI workloads.

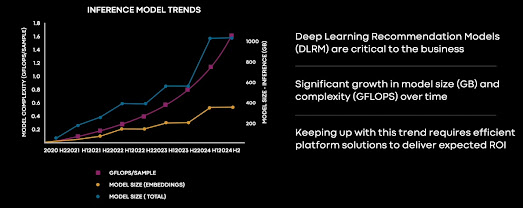

Meta Platforms' custom silicon team, composed of key executives from Intel and Broadcom, has introduced the Meta Training and Inference Accelerator (MTIA) v1. The chip is designed for the compute and memory requirements of deep learning recommendation models (DLRMs), which use embeddings to represent context. DLRMs have grown in size and demand beyond what existing solutions such as CPUs and neural network processors for inference (NNPIs) can handle, which has pushed inference onto GPUs.
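To make the role of embeddings concrete, here is a minimal sketch of the lookup-and-pool step that recommendation models perform on categorical inputs. It is a toy illustration only: the table size, the vector width, the sum pooling, and the function names `make_embedding_table` and `pooled_lookup` are assumptions for this example, not details of Meta's models.

```python
import random


def make_embedding_table(num_rows, dim, seed=0):
    """Build a toy embedding table: one vector of length `dim` per categorical ID."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(num_rows)]


def pooled_lookup(table, ids):
    """Gather the rows for `ids` and sum them element-wise into one vector."""
    dim = len(table[0])
    pooled = [0.0] * dim
    for i in ids:
        row = table[i]
        for k in range(dim):
            pooled[k] += row[k]
    return pooled


# Hypothetical sizes: 1,000 item IDs, 8 values per embedding vector.
table = make_embedding_table(num_rows=1000, dim=8)
user_history = [3, 17, 256]  # hypothetical IDs of items a user interacted with
vector = pooled_lookup(table, user_history)
print(len(vector))
```

The work here is dominated by scattered reads from a large table rather than by arithmetic, which is why memory capacity and bandwidth matter so much for this class of model.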

However, GPUs are not designed specifically for inference and can be inefficient and expensive for deploying and operating real models. Meta Platforms recognized the need to take control of its silicon destiny, much as it did with the Open Compute Project in 2011, and developed its own AI inference chip. The MTIA v1 chip is based on a RISC-V architecture and features 64 processing elements with 128 MB of on-chip SRAM. It uses low-power DDR5 (LPDDR5) as off-chip memory, which gives it the capacity to hold the large embeddings that DLRMs require.
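A rough sizing calculation shows why the off-chip memory matters. The sketch below compares the footprint of an embedding table with the 128 MB of SRAM mentioned above. The table shapes, the one-byte-per-value precision, and the reading of "MB" as 1024 × 1024 bytes are all assumptions chosen for illustration.

```python
# 128 MB of SRAM, from the article; treating MB as 1024 * 1024 bytes is an assumption.
SRAM_BYTES = 128 * 1024 * 1024


def table_bytes(num_rows, dim, bytes_per_value):
    """Footprint of an embedding table with `num_rows` vectors of length `dim`."""
    return num_rows * dim * bytes_per_value


def fits_in_sram(num_rows, dim, bytes_per_value, sram_bytes=SRAM_BYTES):
    """True if the whole table could sit in SRAM with nothing else resident."""
    return table_bytes(num_rows, dim, bytes_per_value) <= sram_bytes


# Hypothetical tables: 100 thousand rows versus 50 million rows, 64 one-byte values each.
small = table_bytes(100_000, 64, 1)
large = table_bytes(50_000_000, 64, 1)
print(small, fits_in_sram(100_000, 64, 1))
print(large, fits_in_sram(50_000_000, 64, 1))
```

Under these assumed shapes the smaller table fits in SRAM with room to spare, while the larger one exceeds it many times over and would have to live in LPDDR5.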

In the Yosemite server, a leaf/spine network of PCI-Express switches connects the MTIA chips to one another, to the host systems, and to host DRAM. The MTIA platform delivers 1,230 teraops of performance at a power consumption of 780 watts per system. Compared with Nvidia's DGX H100 system, the MTIA platform is pitched as competitive on performance relative to the power it draws while consuming far less power per system, which points to potential cost advantages.
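The efficiency implied by those two system-level figures is a simple ratio. The sketch below works it out. The helper name `teraops_per_watt` is hypothetical, and the result reflects quoted peak numbers rather than measured throughput on a real model.

```python
def teraops_per_watt(teraops, watts):
    """Return throughput per unit of power; rejects non-positive power."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return teraops / watts


# System-level figures quoted in the article.
MTIA_SYSTEM_TERAOPS = 1230
MTIA_SYSTEM_WATTS = 780

efficiency = teraops_per_watt(MTIA_SYSTEM_TERAOPS, MTIA_SYSTEM_WATTS)
print(f"{efficiency:.2f} teraops per watt")  # prints "1.58 teraops per watt"
```

The same function can be applied to any other system's published figures, provided both numbers use the same numeric precision and the same sparsity assumptions.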

Meta Platforms' engineering director, Roman Levenstein, highlighted that the MTIA chip delivers up to twice the performance per watt of GPUs on fully connected layers, which are critical for DLRMs. The MTIA device is not yet optimized for high-complexity DLRM models, but its performance per watt is promising on low- and medium-complexity models.

As Meta Platforms continues to refine and optimize its MTIA chip, there is speculation that the company might open source the RTL (register-transfer level) code and design specifications. Given Meta Platforms' history of supporting open-source software and hardware, its choice of the RISC-V architecture for a custom chip fits that philosophy.

The introduction of Meta Platforms' homegrown AI inference chip signals the company's commitment to advancing its AI capabilities and reducing its reliance on third-party components, and it may disrupt the semiconductor market. As Meta Platforms expands its silicon expertise, it could offer its own chips to other companies, creating new opportunities and shaking up the industry landscape.
